What makes Google love your website, and why does it matter in the first place?

Here’s why.

Most people click only the first one to three organic results. Far fewer searchers click the 4th through 10th results, and almost nobody goes to the second page. This means that, once they get past Google’s ads and other features, most people only ever see the top three results.

So if your competition is ranked higher than you in these spots, chances are good that they’re going to get more leads and more sales. That’s obvious, right?

This means that your site needs to be optimized carefully and strategically.

When Google’s robot looks at your website, it considers many different factors, including the content on the page and the page’s URL. In total, Google says it pays attention to more than 200 ranking factors (both on-page and off-page).

Since we don’t know what most of those factors are, we optimize what we do know. The most important thing is to make your site easy for Google to index: if Google can’t figure out what your site is about or what it offers, it isn’t going to prioritize your pages.

With that in mind, here are 10 things Google loves to see on every website that are also proven to improve your organic search rankings.

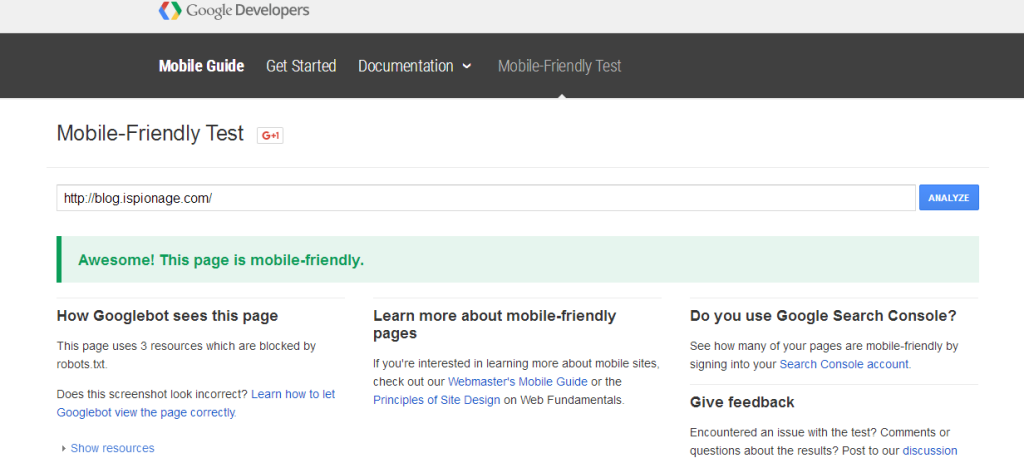

#1: Mobile Friendliness

The primary way people now access the web is through a smartphone, but did you know that Google keeps separate search rankings depending on whether you’re searching with a smartphone or not? It’s true. Google prefers pages that are “mobile friendly,” which means they look good and are easy to navigate on mobile devices.

Google even has a free tool that can help you find out whether your pages are mobile friendly. If your site isn’t mobile friendly, getting there needs to be a top priority: an update to Google’s algorithm released in May boosted mobile-friendly pages even higher in the rankings.

#2: Sitemaps

Sitemaps are a way of telling Google what to expect when it tries to index your site. Think of it like an old phone directory that makes it easier for Google to index all of your pages.

Google loves to see sitemaps.

If they can’t find one, they have to crawl your site through the available links, which means that if there’s an area of your website that isn’t accessible through links (which is itself bad), those pages won’t get indexed without a sitemap.

Google also prefers to see XML sitemaps. Most content management systems can create a sitemap automatically, or you can use a plugin to generate one. With so many plugins available, there’s really no need to build one by hand, but you can confirm that your tool is creating a valid sitemap by using a validator like this one here, or simply by submitting and testing the sitemap in Google Webmaster Tools.
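For the curious, here’s roughly what a sitemap plugin produces. This is a minimal Python sketch using only the standard library; the `build_sitemap` function and the example.com URLs are hypothetical, while the `urlset`, `url`, `loc`, and `lastmod` elements and the namespace come from the sitemaps.org protocol that Google reads.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return an XML sitemap for a list of (url, last_modified) pairs.

    last_modified is an optional W3C date string such as "2017-05-01".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc  # characters like & are escaped for us
        if lastmod:  # <lastmod> is optional, so skip it when unknown
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages on example.com
    print(build_sitemap([
        ("https://www.example.com/", "2017-05-01"),
        ("https://www.example.com/products/", None),
    ]))
```

You would save the output as something like `sitemap.xml` at your site root and then validate or submit it as described above.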

If your sitemap validates and you want to signal to Google that you’re ready for indexing, you can submit it through Google Webmaster Tools. This is a good step to take if you’ve spent a lot of time revamping your site behind the scenes and are ready to go live.

Depending on your site design, you may also want to make an HTML sitemap, which is meant for humans rather than crawlers. HTML sitemaps work well for sites with a lot of dynamic content: they list all the pages on the site by hierarchy, which can help some visitors find their way around.

However, if you make an HTML sitemap you’ll need to put in a brief description of each page. This prevents Google from thinking you’re building a link farm. If you have a very large map, you may want to break it up into multiple pages by major categories. Your XML sitemap can also serve as a useful template for a separate HTML sitemap.
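Here’s a minimal Python sketch of that idea: it groups pages by major category and puts a short description next to every link. The page dictionaries and the `build_html_sitemap` function are hypothetical; in practice you might fill them from the same page list that feeds your XML sitemap.

```python
from html import escape


def build_html_sitemap(pages):
    """Render a human-readable sitemap grouped by category.

    Each page is a dict with hypothetical keys: category, url, title, description.
    """
    by_category = {}
    for page in pages:
        by_category.setdefault(page["category"], []).append(page)

    parts = []
    for category in sorted(by_category):
        parts.append(f"<h2>{escape(category)}</h2>")
        parts.append("<ul>")
        for page in by_category[category]:
            # Every link gets a short description next to it
            parts.append(
                f'<li><a href="{escape(page["url"])}">{escape(page["title"])}</a>: '
                f'{escape(page["description"])}</li>'
            )
        parts.append("</ul>")
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_html_sitemap([
        {"category": "Products", "url": "https://www.example.com/shoes/",
         "title": "Shoes", "description": "Our full range of running and trail shoes."},
        {"category": "Company", "url": "https://www.example.com/about/",
         "title": "About Us", "description": "Who we are and how to reach us."},
    ]))
```

For a very large site, you could write each category to its own page instead of joining everything into one list.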

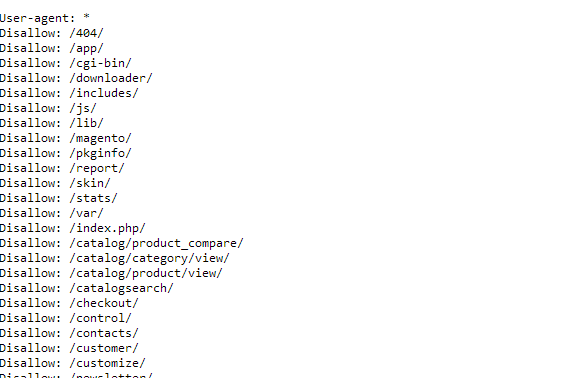

#3: Robots.txt, Canonical Tags, and Pagination

Google hates duplicate content, but some sites, such as e-commerce stores, often have multiple URLs pointing to the same information. The old way of handling this was to block the duplicates with a robots.txt file, but that approach has problems.

Let’s say you have two URLs serving the same content, and external sites link to both of them. If you use robots.txt to block Google from crawling one of those URLs, the crawler won’t measure the value of the links pointing to the blocked page.
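Python’s standard `urllib.robotparser` module applies basic robots.txt rules (simple path prefixes, without Google’s wildcard extensions), so it’s a handy way to see which duplicate URL a rule would hide from a crawler. The robots.txt content and URLs below are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that hides a sorted duplicate of a category page
ROBOTS_TXT = """\
User-agent: *
Disallow: /shoes/sort/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://www.example.com/shoes/",
            "https://www.example.com/shoes/sort/price"):
    status = "crawlable" if parser.can_fetch("Googlebot", url) else "blocked"
    print(url, status)
```

Any external links pointing at the blocked `/shoes/sort/price` URL are the ones whose value the crawler never gets to measure.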

Google and other search engines came up with a solution known as the canonical tag. A canonical tag tells the crawler which URL holds the preferred copy of the content, and essentially passes the link juice of all external links to that page. Canonical tags are a must for e-commerce sites, which often show the same or nearly the same information (think of filtered product listings) in multiple places.
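The tag itself is a single line in the `<head>` of each duplicate page, such as `<link rel="canonical" href="https://www.example.com/shoes/">`. As a minimal sketch, assuming a hypothetical filtered shoe listing on example.com, this standard-library Python snippet pulls the canonical URL out of a page so you can spot-check your templates.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # rel may hold several space-separated values, so split before matching
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html):
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical


# Hypothetical filtered listing that points crawlers at the main shoe page
filtered_page = """
<html><head>
  <title>Shoes sorted by price</title>
  <link rel="canonical" href="https://www.example.com/shoes/">
</head><body>Shoe listing</body></html>
"""

print(find_canonical(filtered_page))
```

Running it against every filtered or sorted variant of a page should print the same main URL each time.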

These are not the only options, but they work for most sites. Robots.txt and canonical tags are related to the larger topic of pagination, or how your content is spread across multiple pages on your website. Pagination is a little more complicated, but it’s very important for making sure you don’t get penalized for duplicate content.

#4: Site Speed

One statistic we see often is that the human attention span is only 8 seconds now thanks to the internet.

It’s not really true; we’re just a lot more impatient. We bounce off pages that don’t load quickly. This is one reason Google started ranking faster-loading pages higher, or, put another way, bumping slow-loading pages down.

This means your site absolutely must load quickly, especially for smartphones.

Google has a tool to help you measure your site’s page speed so you can tell whether you’re too slow for its tastes. It scores your site for both mobile and desktop and gives you tips on how to improve. If you want to go a little more advanced, consider adopting Accelerated Mobile Pages (AMP).

#5: SSL Certificates

By now you’ve probably seen URLs that start with https; if you haven’t noticed, your bank’s website certainly uses one. The https prefix means the site is protected by an SSL certificate.

Google loves sites that are secured this way and has indicated they want all websites to use the technology.

However, migrating a standard site to one protected by SSL can be tricky. The good news is that Google has a great write-up on this page covering what you need to know to pull it off.

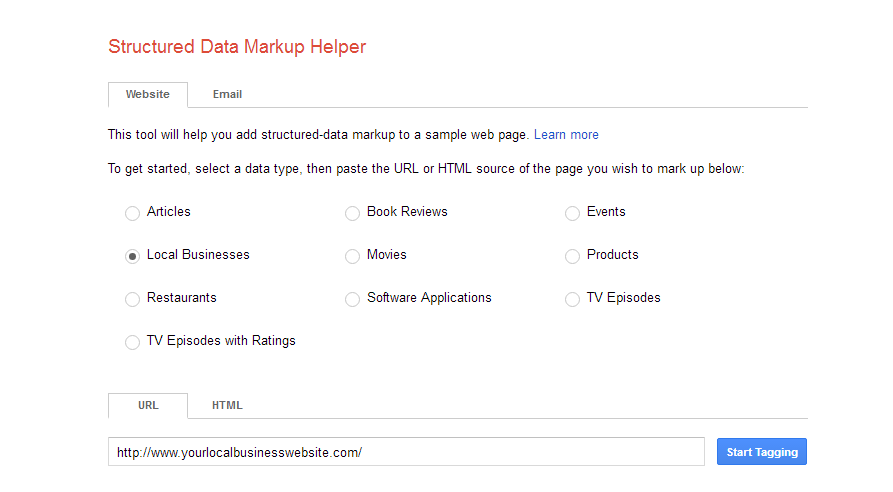

#6: Structured Markup

Have you noticed that some of the top results on Google have photos, ratings, reviews, and a lot of other extra features?

The reason is that they’re using structured markup. Structured markup, also known as schema code, is a set of content annotations that help web crawlers understand particular pieces of information. For instance, you can tell Google that a piece of text is a product name, a review, a news article, or contact information. Google then uses this markup to build those enhanced search results.
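Structured markup can be written in several formats; Google recommends JSON-LD, which sits in a `<script type="application/ld+json">` block in the page. The sketch below builds a schema.org `Product` with an `AggregateRating` in Python. The `product_jsonld` function, product name, and rating figures are made up for illustration, and Google’s own documentation lists which properties each rich result actually requires.

```python
import json


def product_jsonld(name, rating, review_count):
    """Build a schema.org Product snippet with an aggregate rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so a product name can't close the script tag early
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    # Hypothetical product and rating figures
    print(product_jsonld("Trail Running Shoe", 4.6, 128))
```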

Structured markup is most important for local businesses if they want to be found by Google. If you want to get started with this, here’s what you need to know. Read the overview, then skip down to Enable Rich Snippets and read everything else.

#7: Good Content Quality

Good structure and technical back end aren’t the only things that make a page rank high. The content has to be good too. And good, from Google’s perspective, means several things:

- Unique content

- Content long enough to get across the information

- Regular updates

- Rich enough for today’s content standards (includes pictures, video, etc.)

- Clear enough so it’s easy to navigate

In short, content should be easy to understand, clear to read, and not too old. Google also seems to prefer longer content, something Neil Patel writes about in this article about why 3000+ word articles get more traffic.

#8: Citing Your Links

Google’s mission is to organize the world’s information, and that includes citations.

You don’t need a formal citation like the ones you remember from college, but Google appreciates it when you credit your sources. It even mentions this in its search quality rater guidelines.

Thus, if you use source material, link back to the original story in order to get more SEO points from Google.

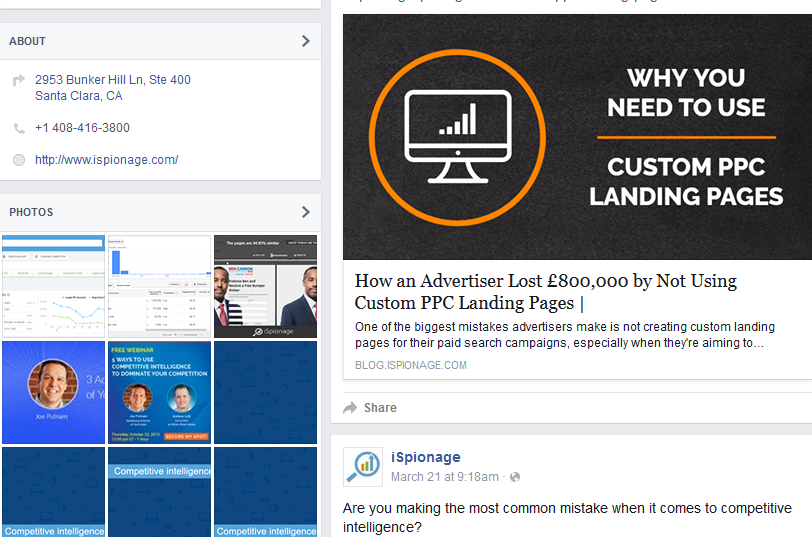

#9: Make It Social

We don’t know precisely how much weight Google gives social signals in page rankings, but we do know it looks for them.

Thus, it’s important that you provide a way for people to share your content on social media. The easiest way to do this is to include social sharing buttons on your posts. Depending on your company, you may also benefit from keeping an active social presence. This is especially important for B2C companies that want to interact with people on Facebook and Twitter.

#10: Titles and Meta Descriptions

Google says it doesn’t use meta descriptions for ranking, but titles and meta descriptions are both quite important for readers who want to know what a page is about. Keep titles under 60 characters so Google doesn’t cut them off. Meta descriptions should describe what the page is about and run between 150 and 160 characters; longer ones will get cut off, and shorter ones aren’t necessarily better.
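A quick script can flag pages that break these rules of thumb. This minimal Python sketch hard-codes the limits given in this article (titles under 60 characters, descriptions of 150 to 160 characters). Google doesn’t publish a fixed character limit, and truncation reportedly depends on display width, so treat the results as approximations. The `check_snippet` function and sample page data are hypothetical.

```python
# Rules of thumb from this article, not official Google limits
TITLE_LIMIT = 60  # keep titles under 60 characters
DESCRIPTION_MIN, DESCRIPTION_MAX = 150, 160


def check_snippet(title, description):
    """Return a list of warnings for a page's title and meta description."""
    warnings = []
    if len(title) >= TITLE_LIMIT:
        warnings.append(f"title is {len(title)} characters; aim for under {TITLE_LIMIT}")
    if not description:
        warnings.append("no meta description; Google will pull one from the page text")
    elif not DESCRIPTION_MIN <= len(description) <= DESCRIPTION_MAX:
        warnings.append(
            f"description is {len(description)} characters; "
            f"aim for {DESCRIPTION_MIN}-{DESCRIPTION_MAX}"
        )
    return warnings


if __name__ == "__main__":
    # Hypothetical page data
    print(check_snippet(
        "10 Things Google Loves to See on Every Website",
        "Mobile friendliness, sitemaps, canonical tags, site speed, SSL and more.",
    ))
```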

If you don’t write a meta description, Google will try to pull one from the page text. Sometimes that’s good enough for a page targeting a long-tail keyword, but it’s good practice to write your own titles and meta descriptions for all your pages to improve click-through rates in organic search results.

Just the Tip of the Iceberg

These tips are just the tip of the SEO iceberg when it comes to optimizing your site. There are myriad other factors, such as:

- Building quality backlinks

- Using Google Analytics to measure SEO performance

- Modern UX design

- Internal linking

- Image tagging

- New Google features (e.g. My Business)

The tips in this article will give you a solid basis for the rest of your SEO efforts. If the fundamentals that let Google crawl your site aren’t in place, later techniques like link building won’t work as well as they could. So check whether your site is using the tools we’ve mentioned, and make it a site Google will love.

Author

Chris Hickman is the Founder and CEO of Adficient, with 14 years of experience in search marketing and conversion optimization. In 2006 he founded GetBackonGoogle.com, which helps businesses and websites suspended from AdWords get back on Google.
